Welcome to MULTISIMO!

MULTImodal and MULTIparty Social Interactions MOdelling

More often than not, interaction with avatars, robots or chatbots feels "unnatural", especially when it involves more than one modality. This is because such artificial agents lack cognitive and social skills. Although we rarely notice these skills explicitly during human-to-human interaction, they largely make up the gap that must be bridged for machines, computers and robots to be perceived as interacting in a more human-like manner.

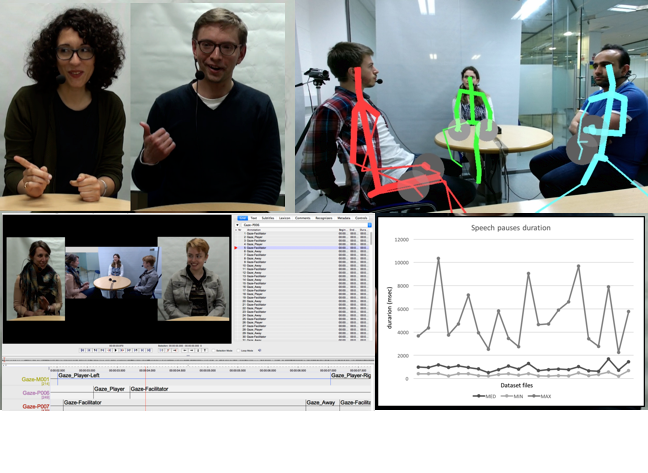

MULTISIMO addresses the modelling of aspects of multimodal communicative human behaviour during natural interactions in multiparty settings. The project builds on models trained on a new dataset of multimodal communicative human behaviour, obtained through rigorous recording of natural multiparty interaction sessions carried out within the scope of the project, combined with state-of-the-art techniques in multimodal communication and language technology, opening up new perspectives on human-machine interaction. The project has also innovated in the fields of affective computing and behavioural analytics by investigating the perception and automatic detection of psychological variables, group leadership and emotion-related features in group interaction, exploiting linguistic, acoustic and visual features.

To derive accurate multimodal interaction models, MULTISIMO has produced a multimodal corpus of human-human interaction recordings and related annotations, collected using a concise experimental protocol. The corpus is freely available for scientific research purposes under specific terms and conditions.